How we increased the read performance of our NodeJS client by 2x using Rust

Overview

At Kurrent, we take customer feedback seriously. When multiple users expressed concerns about the performance of our NodeJS client, we knew we needed to take action. Our NodeJS client was experiencing slower read operations than expected, impacting the overall performance of our users' applications. This article details how we diagnosed the issue and leveraged Rust to significantly boost performance without requiring our users to change their code.

Identifying the Bottleneck

Our journey began when several customers reported that the NodeJS client for KurrentDB was experiencing slower read performance than they expected. We immediately began investigating, as delivering reliable performance is a top priority for us. Our goal was to ensure that clients could depend on KurrentDB for high-throughput applications.

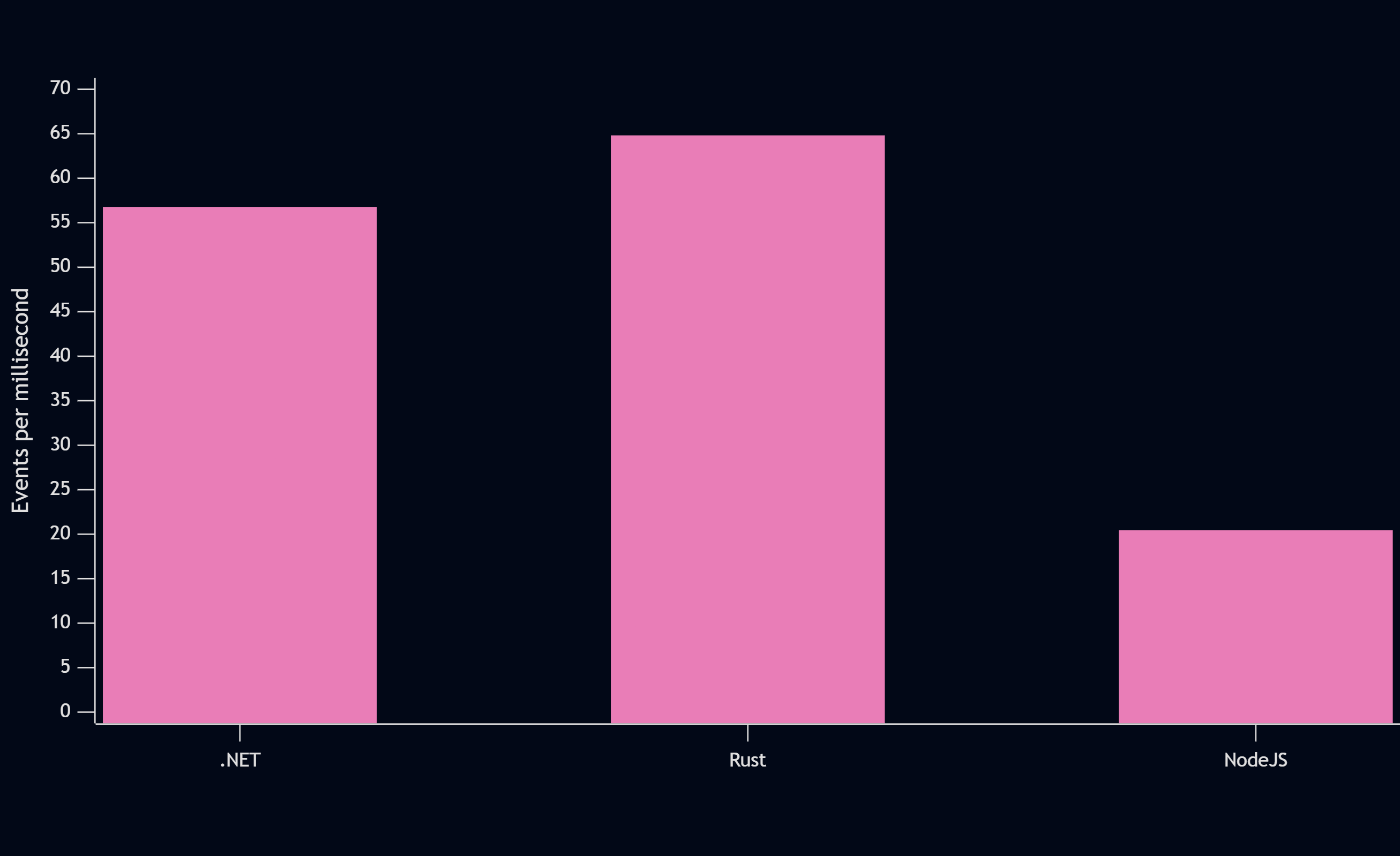

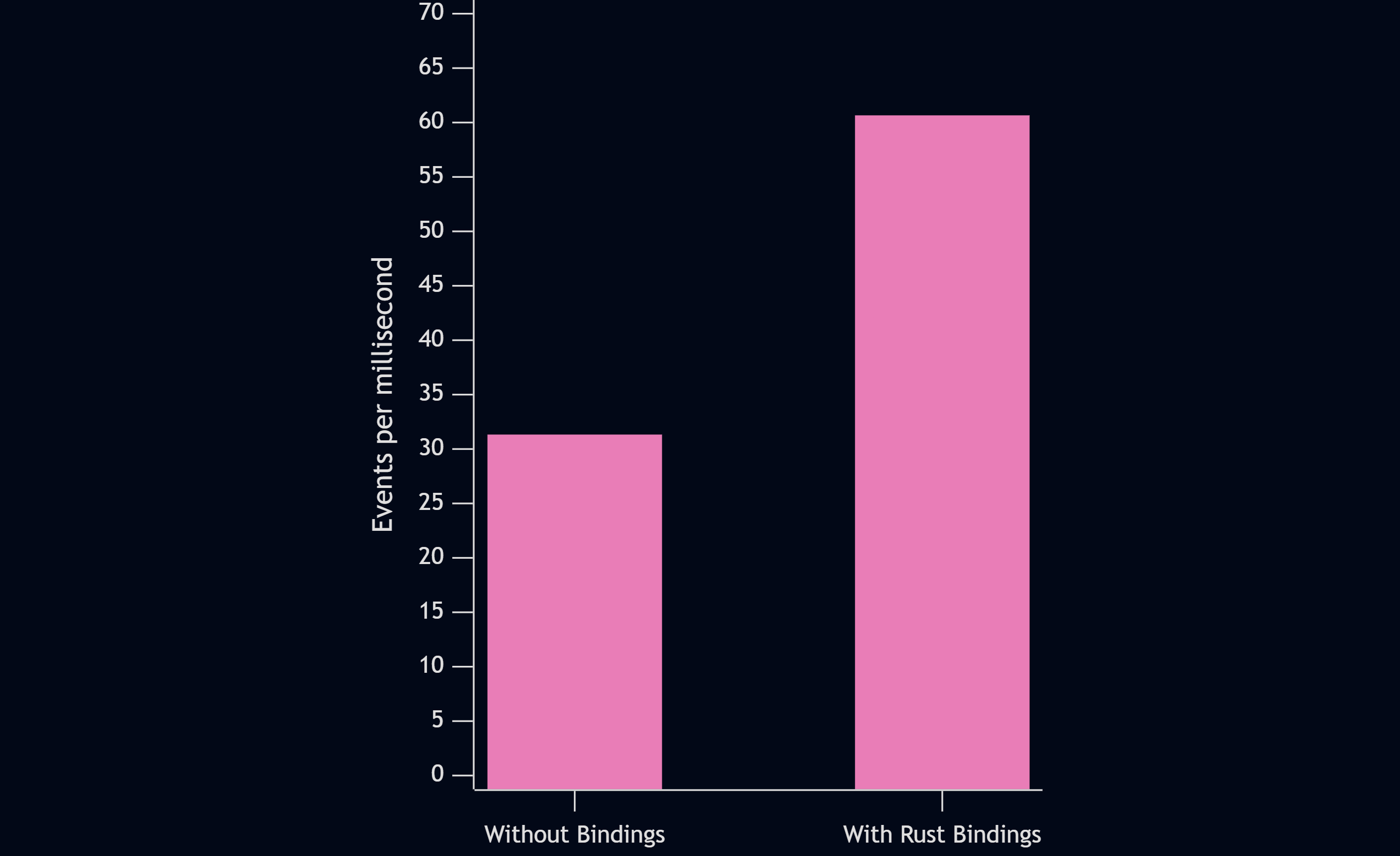

Our first step was to benchmark our NodeJS client against our other language clients to understand the scope of the problem. The results clearly showed the extent of the issue:

KurrentDB client read performance comparison across languages

The benchmark showed that our NodeJS client could process only ~20 events per millisecond, while the other clients performed much better. This matched the feedback we had been hearing from users and confirmed that the NodeJS client was lagging behind our implementations in other languages.
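For readers who want to reproduce this kind of measurement, here is a minimal sketch of how an events-per-millisecond figure can be computed. It is not the benchmark harness behind the charts: `events_per_ms` and `time_read` are hypothetical helper names, and a plain iterator stands in for a real stream read.

```rust
use std::time::{Duration, Instant};

/// Throughput in events per millisecond for `count` events read in `elapsed`.
/// Returns 0.0 when no time has elapsed, to avoid dividing by zero.
pub fn events_per_ms(count: u64, elapsed: Duration) -> f64 {
    let ms = elapsed.as_secs_f64() * 1_000.0;
    if ms == 0.0 {
        return 0.0;
    }
    count as f64 / ms
}

/// Drains `events` and returns how many items were read and how long it took.
/// Any iterator works here; a real benchmark would read from the database.
pub fn time_read<I: Iterator>(events: I) -> (u64, Duration) {
    let start = Instant::now();
    let mut count = 0u64;
    for _event in events {
        count += 1;
    }
    (count, start.elapsed())
}
```

Feeding the pair returned by `time_read` into `events_per_ms` gives a figure in the same unit as the numbers quoted in this article. A meaningful comparison would also need warm-up runs and several repetitions, which this sketch leaves out.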

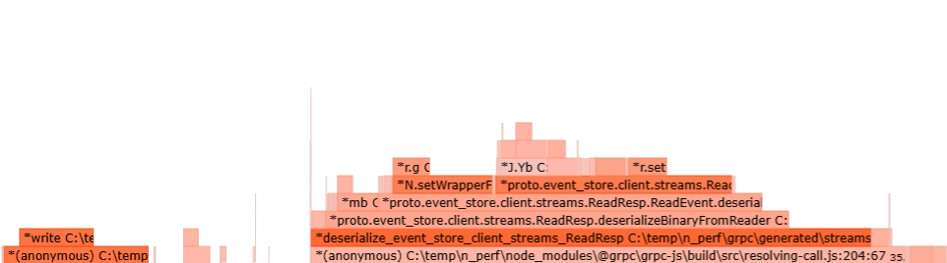

Curious about what was causing this gap, we decided to dig deeper. After running a profiling test on the NodeJS client, we found that most of the time was spent in the underlying gRPC library and the Node.js runtime itself. The actual work of constructing recorded event objects accounted for only a tiny fraction of the total processing time.

To further isolate the issue, we reran the profiling after removing all of our own functions, leaving only the underlying gRPC client. The results were nearly identical, suggesting that the performance limitations originated deeper within the stack.

Profiling results of the NodeJS client

Finding a Solution

We wanted to improve read performance without disrupting the developer experience or requiring changes to existing application code. Our initial approach was to see if we could optimize within the constraints of pure JavaScript. We considered options like switching to an alternative gRPC transport, but this would have required substantial changes with no guarantee of improved performance.

Given that our Rust client was already the fastest implementation available, we started exploring ways to reuse its core for performance-critical operations in the NodeJS environment. Rust’s strengths in systems-level performance, memory safety, and concurrency made it an ideal candidate for bridging this gap.

Rather than rewrite the entire NodeJS client or introduce a separate service layer, we chose a more seamless path by integrating Rust directly into the NodeJS runtime using Neon. Since we already had a client written in Rust, much of the core logic was ready to go and we just needed to wrap it for use in NodeJS. This allowed us to compile key logic into a native addon and expose high-performance Rust functions to our JavaScript code with minimal overhead.

This hybrid approach gave us the best of both worlds. We kept the developer friendliness and ecosystem of Node.js while gaining the raw performance and reliability of Rust where it mattered most.

Building the Rust Bridge Module

Our goal was to keep the familiar NodeJS API that our users already knew, while moving the performance-critical parts over to Rust. This way, users didn’t have to change any of their code. We replaced the original read implementation with a much faster Rust module, using Neon to connect NodeJS with our Rust code and combine the ease of JavaScript with the speed of Rust.

This meant writing Rust functions that accept parameters from Node.js, convert them into the appropriate Rust client types, and call the relevant KurrentDB client methods. These functions are then exported as part of a Neon module and used by our Node.js application.

One such function, read_stream, handles reading from a stream with support for various options passed in from JavaScript. Here’s an excerpt illustrating the key parts:

```rust
pub fn read_stream(client: Client, mut cx: FunctionContext) -> JsResult<JsPromise> {
    // First JavaScript argument: the name of the stream to read.
    let stream_name = cx.argument::<JsString>(0)?.value(&mut cx);
    // Optional parameters object passed in from JavaScript.
    let params = extract_params(&mut cx)?;

    // Start from the defaults, then let each helper apply one group of
    // settings and hand the updated options to the next helper.
    let mut options = ReadStreamOptions::default();
    options = parse_direction(params, options, &mut cx)?;
    options = parse_position(params, options, &mut cx)?;
    options = parse_credentials(params, options, &mut cx)?;
    options = apply_misc_flags(params, options, &mut cx)?;

    let options = Options::Regular {
        stream_name,
        options,
    };

    // Perform the read and return a promise to the JavaScript caller.
    read_internal(client, options, cx)
}
```

In this function, FunctionContext acts as our bridge to the JavaScript runtime. It lets us grab arguments passed in from Node.js, create new JavaScript values, and handle errors when needed. For example, we use it to pull out the stream name and any optional parameters like fromRevision, direction, or credentials that the user might provide.
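The conversion step itself, turning loosely typed values from JavaScript into typed Rust options, can be sketched without Neon. Everything in the following block is an assumption made for illustration: a `HashMap` of strings stands in for the JavaScript parameters object, and `ReadOptions`, `Direction`, `Position`, and `build_options` are hypothetical names, not the types of the KurrentDB Rust client.

```rust
use std::collections::HashMap;

#[derive(Debug, Clone, Copy, PartialEq)]
pub enum Direction { Forwards, Backwards }

#[derive(Debug, Clone, Copy, PartialEq)]
pub enum Position { Start, End, Revision(u64) }

/// Hypothetical typed options; a stand-in for the real client's options type.
#[derive(Debug, Clone, PartialEq)]
pub struct ReadOptions {
    pub direction: Direction,
    pub from: Position,
}

impl Default for ReadOptions {
    fn default() -> Self {
        Self { direction: Direction::Forwards, from: Position::Start }
    }
}

/// Stand-in for the parameters object received from JavaScript.
pub type Params = HashMap<String, String>;

/// Applies the optional "direction" parameter, rejecting unknown values.
fn parse_direction(params: &Params, mut options: ReadOptions) -> Result<ReadOptions, String> {
    match params.get("direction").map(String::as_str) {
        None => {} // not provided: keep the default
        Some("forwards") => options.direction = Direction::Forwards,
        Some("backwards") => options.direction = Direction::Backwards,
        Some(other) => return Err(format!("invalid direction: {other}")),
    }
    Ok(options)
}

/// Applies the optional "fromRevision" parameter: "start", "end", or a number.
fn parse_position(params: &Params, mut options: ReadOptions) -> Result<ReadOptions, String> {
    match params.get("fromRevision").map(String::as_str) {
        None => {}
        Some("start") => options.from = Position::Start,
        Some("end") => options.from = Position::End,
        Some(n) => {
            let rev = n
                .parse::<u64>()
                .map_err(|e| format!("invalid fromRevision {n:?}: {e}"))?;
            options.from = Position::Revision(rev);
        }
    }
    Ok(options)
}

/// Threads one options value through every parsing step, stopping at the first error.
pub fn build_options(params: &Params) -> Result<ReadOptions, String> {
    let options = ReadOptions::default();
    let options = parse_direction(params, options)?;
    parse_position(params, options)
}
```

Passing the options value through each step keeps every helper small and means an invalid parameter surfaces as a single error before any read is attempted. In a Neon function that error would typically be thrown back to JavaScript through the context.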

Once our Rust functions are ready, we expose them to JavaScript by registering them in the Neon module entry point:

```rust
#[neon::main]
fn main(mut cx: ModuleContext) -> NeonResult<()> {
    cx.export_function("createClient", client::create)?;
    cx.export_function("readStreamNext", client::read_stream_next_mutex)?;
    Ok(())
}
```

These exported function names are what we use when importing and calling them from the Node.js client.
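The name read_stream_next_mutex hints at a pull-based design in which the JavaScript side repeatedly asks a lock-guarded stream for its next item. That reading is an assumption drawn from the name alone, not a description of the actual bridge. A minimal sketch of the general pattern, using only the standard library and a hypothetical `SharedStream` type, might look like this:

```rust
use std::sync::{Arc, Mutex};

/// Hypothetical handle to a stream of items shared between callers.
/// A plain iterator stands in for the underlying read stream.
pub struct SharedStream<I> {
    inner: Arc<Mutex<I>>,
}

impl<I: Iterator> SharedStream<I> {
    pub fn new(iter: I) -> Self {
        Self { inner: Arc::new(Mutex::new(iter)) }
    }

    /// Locks the stream, pulls one item, and releases the lock on return.
    /// Returns `None` once the stream is exhausted.
    pub fn next_event(&self) -> Option<I::Item> {
        let mut guard = self.inner.lock().expect("stream mutex poisoned");
        guard.next()
    }
}

impl<I> Clone for SharedStream<I> {
    /// Clones the handle only; every clone reads from the same stream.
    fn clone(&self) -> Self {
        Self { inner: Arc::clone(&self.inner) }
    }
}
```

Because every handle points at the same guarded iterator, successive calls never return the same item twice, even when they come from different clones of the handle.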

You can find the detailed implementation of the Rust bridge client here.

The Results

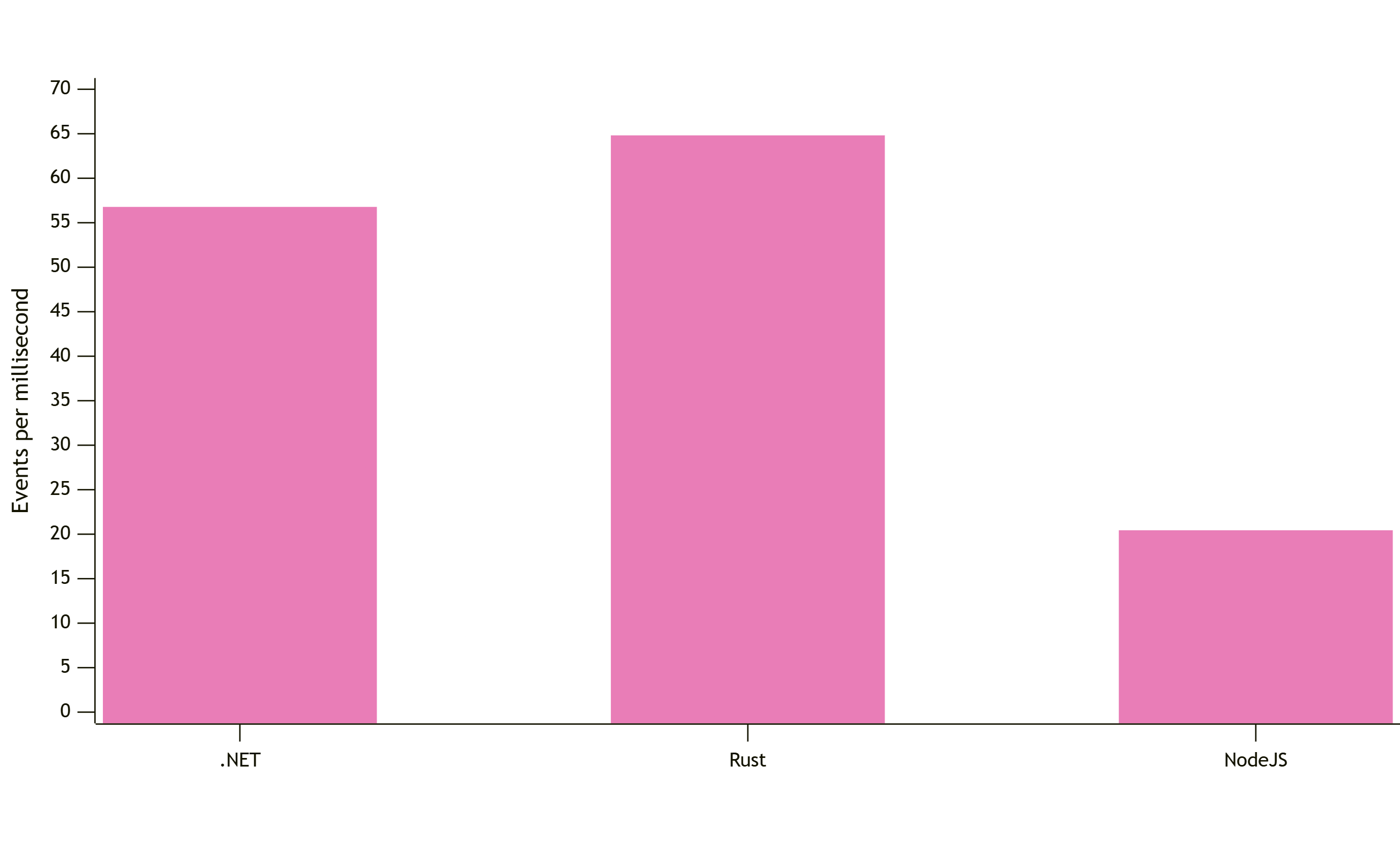

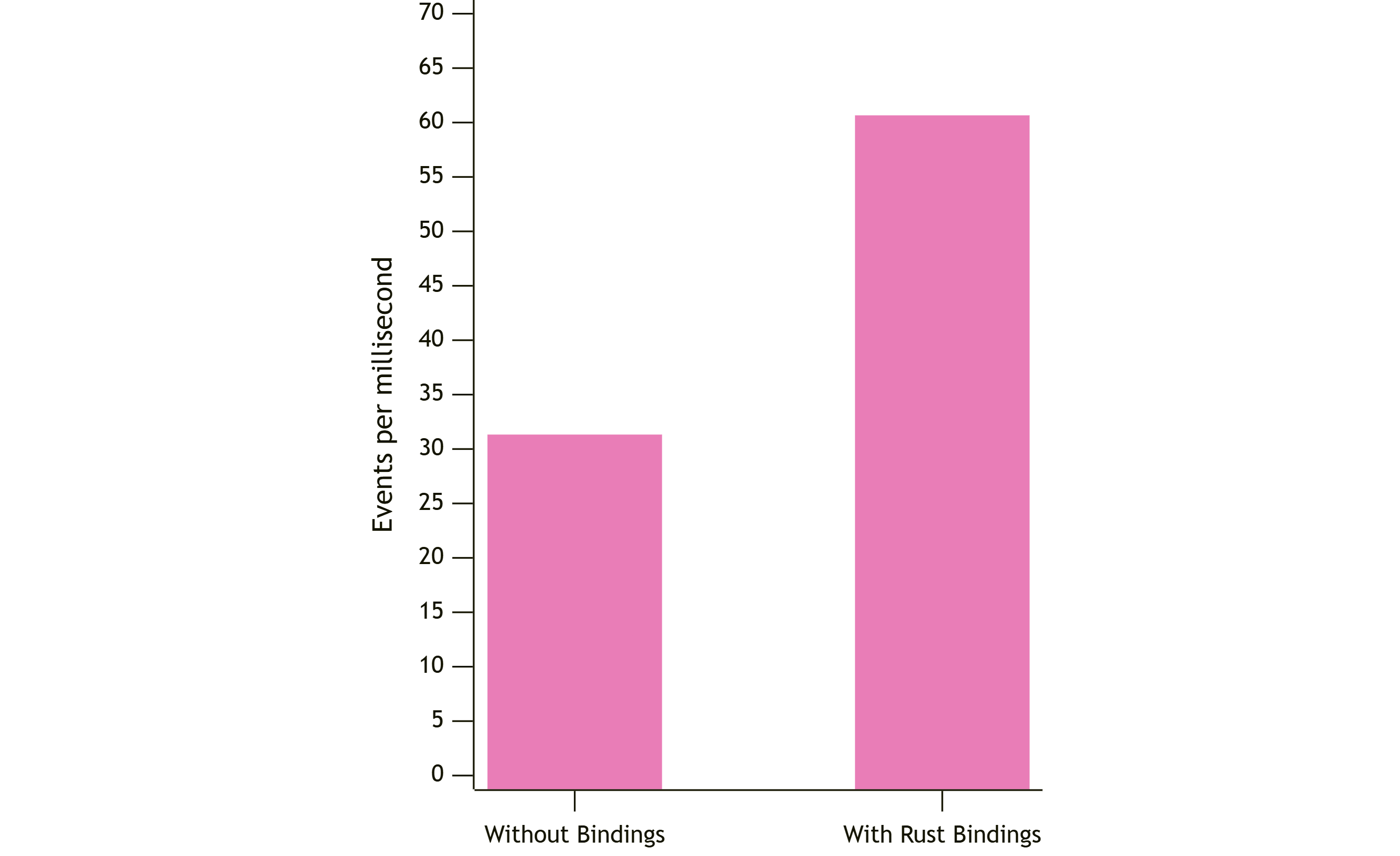

After implementing and thoroughly testing our new hybrid solution, we saw dramatic improvements in performance:

NodeJS client read performance before and after Rust integration

Our new hybrid NodeJS client nearly doubled read performance, jumping from ~31 events per millisecond to ~60 events per millisecond. This puts our NodeJS client on par with, and in some cases ahead of, our other language implementations.

What This Means for Our Users

For our users, this performance improvement means:

- Faster read operations

- Lower latency for real-time applications

- Reduced resource utilization

- Better scaling for high-throughput scenarios

All of this comes without any need to change application code or learn a new API. The improvements are completely transparent to existing applications.

Final Words

This journey from identifying a performance problem to delivering a solution that nearly doubles throughput demonstrates our commitment to providing the best possible experience for our users. By combining the developer-friendly nature of NodeJS with the raw performance of Rust, we’ve created a client that gives you the best of both worlds.

We’re continuing to explore additional ways to improve performance and reliability for our clients, and would love to hear your feedback. If you’re currently using our NodeJS client, we encourage you to upgrade to the latest KurrentDB Client to take advantage of these significant performance improvements.